Introduction

Diffusion models have proven to be powerful generative models and have achieved great success in image synthesis. One of the most widely known applications is the synthesis of images conditioned on text or image input. Models like ... go one step further and generate short videos from instructions.

Meanwhile, extending this powerful model to 3D is far from trivial. A 3D shape can be represented as points, voxels, surfaces, and so on. Unlike image data, these forms are less structured and should be invariant w.r.t. permutation. Each of these data structures requires different techniques (e.g. PointNet for point clouds, graph neural networks for surface data).

Point-Voxel diffusion

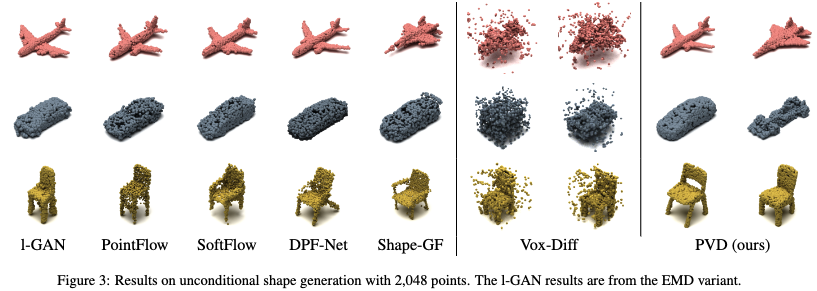

Direct application of diffusion models to either the voxel or the point representation results in poor generation quality. Zhou et al. proposed Point-Voxel Diffusion (PVD), which uses both point-wise and voxel-wise information to guide diffusion. The framework is almost exactly the same as DDPM except for the choice of backbone network. Instead of using a U-Net to denoise, the paper uses a point-voxel CNN to parameterize the model. A point-voxel CNN can extract features from point cloud data and requires much less computation than previous methods (e.g. PointNet).

Quick review of DDPM

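Here, we quickly review training and sampling with a diffusion model. DDPM parameterizes the model to learn the "noise" $\epsilon$, and the paper uses a point-voxel CNN to represent $\epsilon_\theta(x_t, t)$. The loss function is

$$
\min_\theta \; \mathbb{E}_{x_0, \epsilon, t} \left\| \epsilon - \epsilon_\theta(x_t, t) \right\|^2, \qquad x_t = \sqrt{\bar\alpha_t}\, x_0 + \sqrt{1 - \bar\alpha_t}\, \epsilon .
$$

With a trained model $\epsilon_\theta$, the sampling process is

$$
x_{t-1} = \frac{1}{\sqrt{\alpha_t}} \left( x_t - \frac{1 - \alpha_t}{\sqrt{1 - \bar\alpha_t}}\, \epsilon_\theta(x_t, t) \right) + \sqrt{\beta_t}\, z
$$

where $z \sim \mathcal{N}(0, I)$, $\alpha_t = 1 - \beta_t$ and $\bar\alpha_t = \prod_{s \le t} \alpha_s$.

In the context of this paper, $x_0$ is point cloud data.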
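To make the training loss and the sampling step concrete, here is a minimal NumPy sketch. The linear noise schedule, the function names, and the placeholder `zero_eps_model` are assumptions made for illustration; in PVD the noise predictor is a point-voxel CNN, which is not reproduced here.

```python
import numpy as np

def make_schedule(T=1000, beta_start=1e-4, beta_end=0.02):
    """Linear beta schedule (an assumed choice) and the derived alpha terms."""
    betas = np.linspace(beta_start, beta_end, T)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars

def q_sample(x0, t, eps, alpha_bars):
    """Closed-form forward noising: x_t = sqrt(abar_t) x_0 + sqrt(1 - abar_t) eps."""
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps

def ddpm_loss(eps_model, x0, t, eps, alpha_bars):
    """Mean squared error between the true noise and the predicted noise."""
    x_t = q_sample(x0, t, eps, alpha_bars)
    return np.mean((eps - eps_model(x_t, t)) ** 2)

def p_sample_step(eps_model, x_t, t, z, betas, alphas, alpha_bars):
    """One reverse step x_t -> x_{t-1}; no noise is added at the last step (t = 0)."""
    coef = (1.0 - alphas[t]) / np.sqrt(1.0 - alpha_bars[t])
    mean = (x_t - coef * eps_model(x_t, t)) / np.sqrt(alphas[t])
    noise = np.sqrt(betas[t]) * z if t > 0 else 0.0
    return mean + noise

def zero_eps_model(x_t, t):
    """Hypothetical placeholder for the noise predictor: always predicts zero noise."""
    return np.zeros_like(x_t)
```

Here `x0` would be an `(N, 3)` array holding a point cloud, and the time index `t` is 0-based.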

Experiment

In the experiments, the paper tested the algorithm on shape generation and shape completion. It examined metrics such as Chamfer Distance (CD) and Earth Mover's Distance (EMD).
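As an illustration of the first metric, here is a small NumPy sketch of a Chamfer Distance between two point clouds. Conventions differ between papers (squared versus unsquared distances, sums versus means); this version uses squared distances averaged in both directions, which is an assumption and not necessarily the exact variant used in the paper.

```python
import numpy as np

def chamfer_distance(a, b):
    """Symmetric Chamfer Distance between point clouds a (N, 3) and b (M, 3).

    For every point, take the squared distance to its nearest neighbour in the
    other cloud, average within each direction, and add the two directions.
    """
    diff = a[:, None, :] - b[None, :, :]      # (N, M, 3) pairwise differences
    d2 = np.sum(diff ** 2, axis=-1)           # (N, M) squared distances
    return d2.min(axis=1).mean() + d2.min(axis=0).mean()
```

The pairwise matrix costs O(NM) memory, so this form is only practical for small clouds.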

Diffusion Probabilistic Models for 3D Point Cloud Generation(DPM)

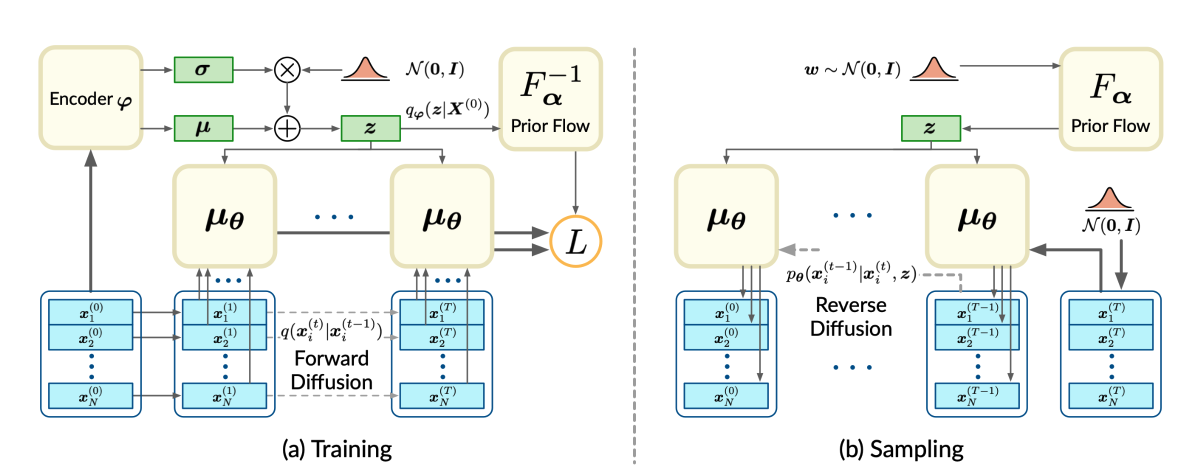

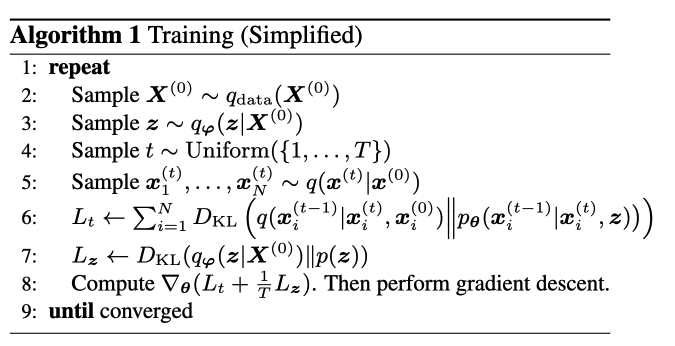

Luo and Hu proposed a framework that introduces a latent encoding to DDPM. The diffusion process and the reverse process still happen in physical space, not in latent space. The latent variable is used as guidance during the reverse process.

For clarity of discussion, we denote by $X = \{x_i\}_{i=1}^N$ a 3D shape in the form of a point cloud, where $x_i$ for $i = 1, \dots, N$ represents each point in the point cloud. Let $p(X)$ be the distribution of shapes and $p(x)$ be the distribution of points in arbitrary shapes.

Formulation

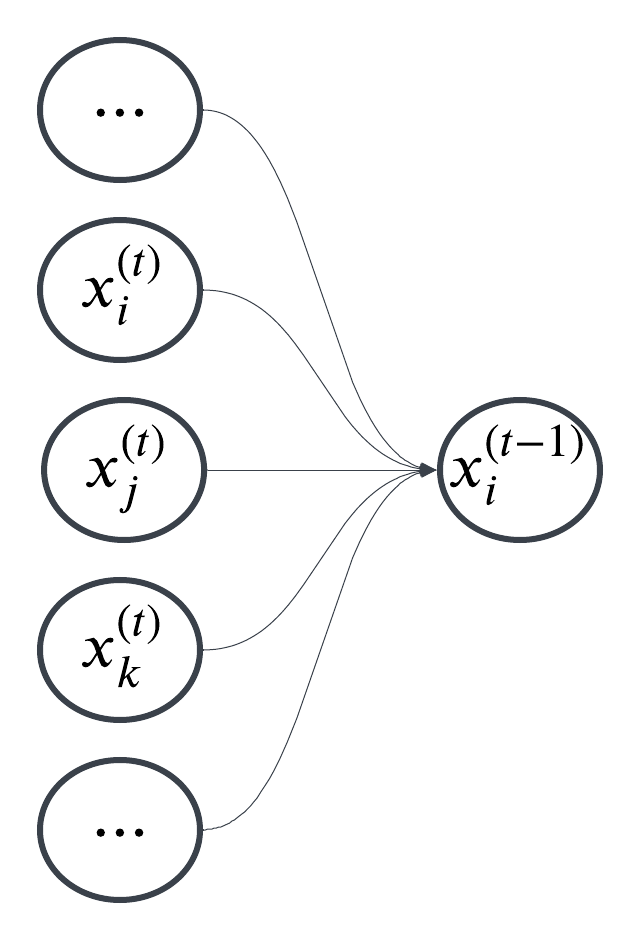

The paper introduced a latent variable $z$. However, instead of applying the diffusion process in latent space as in Rombach et al., it is used only as guidance during the diffusion. More importantly, unlike PVD or image-based diffusion models, the diffusion process is conducted pointwise. To be more specific, each point in a point cloud is diffused separately. The paper further concluded that the points in a given point cloud are conditionally independent given the shape (through its latent $z$), which can be formulated as

$$
p_\theta\left(X^{(0:T)} \mid z\right) = \prod_{i=1}^{N} p_\theta\left(x_i^{(0:T)} \mid z\right),
$$

where the superscript $(t)$ indexes the diffusion step.

This is also shown in the following graphical model.

In comparison, PVD updates all points simultaneously at each reverse diffusion step and can be viewed as the following graphical model.

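The forward process is the same as in DDPM or PVD: it simply adds noise to the points. The reverse process is formulated as

$$
p_\theta\left(x_i^{(0:T)} \mid z\right) = p\left(x_i^{(T)}\right) \prod_{t=1}^{T} p_\theta\left(x_i^{(t-1)} \mid x_i^{(t)}, z\right), \qquad
p_\theta\left(x_i^{(t-1)} \mid x_i^{(t)}, z\right) = \mathcal{N}\left(x_i^{(t-1)} \mid \mu_\theta\left(x_i^{(t)}, t, z\right), \beta_t I\right).
$$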

In this setup, represent an image's probability under the learned model and, in below, we will use as the encoder. For simplicity of discussion, we will use to replace .
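The pointwise reverse step described above can be sketched as follows. The function `toy_mean_net` is a hypothetical stand-in for the learned mean network, and using a 3-dimensional latent is purely for convenience; the point is only that each point is updated from its own coordinates plus the shared latent, never from other points.

```python
import numpy as np

def toy_mean_net(x_t, t, z):
    """Hypothetical stand-in for the learned mean: sees ONE point and the shape latent."""
    return 0.9 * x_t + 0.1 * z

def reverse_step(points_t, t, z, beta_t, rng):
    """One reverse diffusion step applied to each point independently, given z."""
    out = np.empty_like(points_t)
    for i in range(points_t.shape[0]):
        mean = toy_mean_net(points_t[i], t, z)
        out[i] = mean + np.sqrt(beta_t) * rng.standard_normal(points_t.shape[1])
    return out
```

Because no point looks at any other point, permuting the input rows only permutes the output rows (up to the sampled noise), which matches the conditional independence assumption.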

Training objective

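Similar to DDPM, the log-likelihood of the reverse process can be lower bounded by

$$
\mathbb{E}\left[\log p_\theta\left(X^{(0)}\right)\right] \ge \mathbb{E}_q\left[\log \frac{p_\theta\left(X^{(0:T)}, z\right)}{q\left(X^{(1:T)}, z \mid X^{(0)}\right)}\right].
$$

The process is parameterized by $\theta$ and the variational lower bound can be written in terms of KL divergences,

$$
L(\theta, \varphi) = \mathbb{E}_q\left[\sum_{t=2}^{T} D_{KL}\left(q\left(X^{(t-1)} \mid X^{(t)}, X^{(0)}\right) \,\|\, p_\theta\left(X^{(t-1)} \mid X^{(t)}, z\right)\right) - \log p_\theta\left(X^{(0)} \mid X^{(1)}, z\right) + D_{KL}\left(q_\varphi\left(z \mid X^{(0)}\right) \,\|\, p(z)\right)\right].
$$

This objective function can then be written in terms of points (instead of shapes) as follows,

$$
L(\theta, \varphi) = \mathbb{E}_q\left[\sum_{t=2}^{T} \sum_{i=1}^{N} D_{KL}\left(q\left(x_i^{(t-1)} \mid x_i^{(t)}, x_i^{(0)}\right) \,\|\, p_\theta\left(x_i^{(t-1)} \mid x_i^{(t)}, z\right)\right) - \sum_{i=1}^{N} \log p_\theta\left(x_i^{(0)} \mid x_i^{(1)}, z\right) + D_{KL}\left(q_\varphi\left(z \mid X^{(0)}\right) \,\|\, p(z)\right)\right].
$$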

Another important point about this paper is that it does not assume the prior distribution of the latent variable to be standard normal. Instead, the algorithm needs to learn the prior distribution for sampling. Therefore, both sides of the KL term $D_{KL}\left(q_\varphi(z \mid X^{(0)}) \,\|\, p(z)\right)$ involve trainable parameters. An encoder is learned by parameterizing it with $q_\varphi(z \mid X^{(0)})$. A map that transforms samples from the standard normal to the prior distribution is learned by parameterizing it with a bijective neural network $F_\alpha$ and $z = F_\alpha(w)$, where $w \sim \mathcal{N}(0, I)$.
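To illustrate how a bijection turns a standard normal into a learnable prior with a tractable density, here is a sketch using the simplest possible bijection, an elementwise affine map. This is an assumption chosen for brevity and is not the flow architecture used in the paper; `log_scale` and `shift` play the role of the trainable parameters.

```python
import numpy as np

def std_normal_logpdf(w):
    """Log-density of a standard normal evaluated at vector w."""
    return -0.5 * np.sum(w ** 2) - 0.5 * w.size * np.log(2.0 * np.pi)

def affine_flow_forward(w, log_scale, shift):
    """Map a standard-normal sample w to a prior sample z = exp(log_scale) * w + shift."""
    return np.exp(log_scale) * w + shift

def affine_flow_logprob(z, log_scale, shift):
    """Change of variables: log p(z) = log p_w(w) - log|det dz/dw| with w = F^{-1}(z)."""
    w = (z - shift) * np.exp(-log_scale)
    return std_normal_logpdf(w) - np.sum(log_scale)
```

With `log_scale = 0` and `shift = 0` the map is the identity and the prior reduces to the standard normal.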

Latent Point Diffusion Models(LION)

The latent diffusion model of Rombach et al. for image synthesis conducts the diffusion process in latent space. Vahdat et al., unlike the previously mentioned PVD, apply the same idea to 3D point clouds.

Formulation

The model consists of a VAE that encodes shapes into a latent space and diffusion models that map vectors from a standard normal distribution to that latent space.

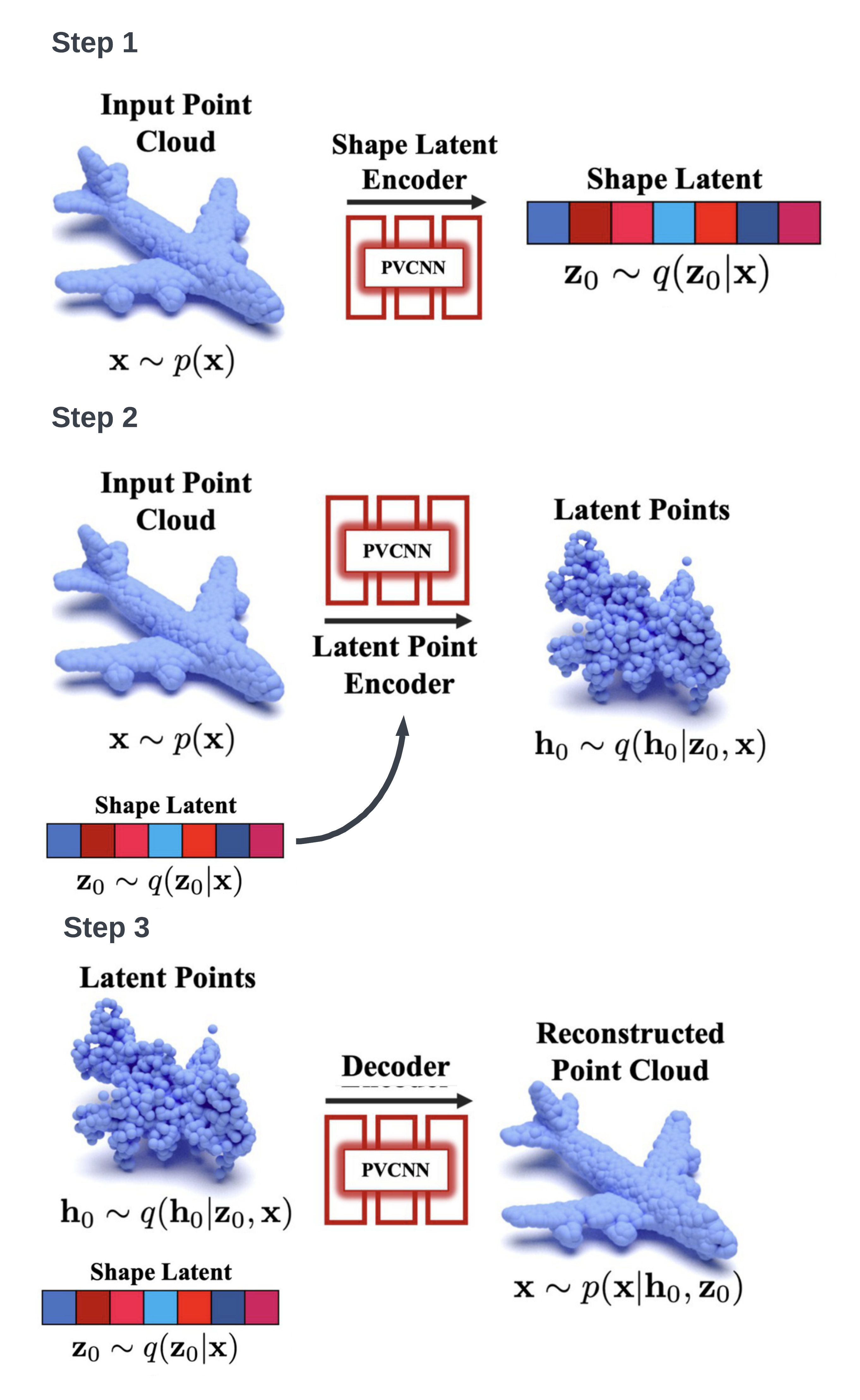

Stage 1: VAE

In the VAE part, the encoding-decoding process has three steps:

- Use a PVCNN to encode the whole point cloud into a latent vector (shape latent) $z_0$.

- Concatenate the shape latent with each point in the point cloud. Then use a PVCNN to map the point cloud to a latent "point cloud" in latent space.

- Decode from the concatenation of the latent points and the shape latent.
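The data flow of the three steps above can be sketched with array shapes. Everything here is a toy stand-in: the random linear maps replace the PVCNNs, the latent sizes (8 and 4) are arbitrary, and the encoders are deterministic, whereas a real VAE encoder would output a mean and variance and sample from them.

```python
import numpy as np

_rng = np.random.default_rng(0)
W_SHAPE = _rng.standard_normal((3, 8))       # toy weights for the shape encoder
W_POINT = _rng.standard_normal((3 + 8, 4))   # toy weights for the point encoder
W_DEC = _rng.standard_normal((4 + 8, 3))     # toy weights for the decoder

def encode_shape(points):
    """Step 1: whole point cloud (N, 3) -> one shape latent (8,), via max pooling."""
    return (points @ W_SHAPE).max(axis=0)

def encode_points(points, z0):
    """Step 2: concatenate the shape latent to every point, map to latent points (N, 4)."""
    tiled = np.broadcast_to(z0, (points.shape[0], z0.shape[0]))
    return np.concatenate([points, tiled], axis=1) @ W_POINT

def decode(h0, z0):
    """Step 3: concatenate the shape latent to every latent point, map back to (N, 3)."""
    tiled = np.broadcast_to(z0, (h0.shape[0], z0.shape[0]))
    return np.concatenate([h0, tiled], axis=1) @ W_DEC
```

Max pooling in `encode_shape` keeps the shape latent independent of the point ordering.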

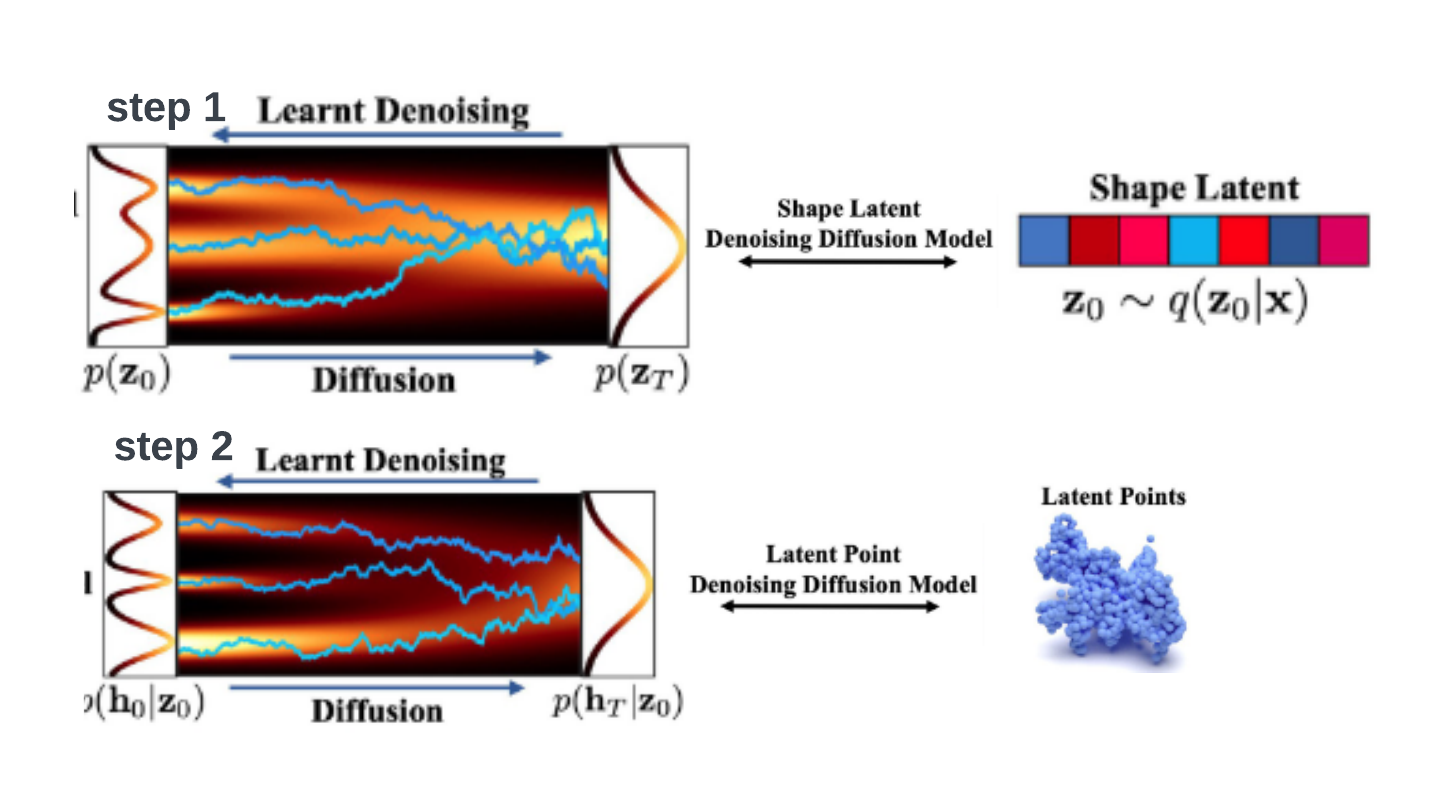

Stage 2: Diffusion

There are two diffusion processes, since there are two latents. Both reverse processes start from a standard normal distribution and map to the shape latent and the point latents, respectively.

Sampling process

The sample generation process consists of three steps:

- Sample a vector from a multivariate standard normal distribution and reverse-diffuse it into a shape latent $z_0$.

- Sample a vector from a multivariate standard normal distribution and reverse-diffuse it into point latents, concatenating the shape latent at each intermediate step.

- Decode the point latents, together with the shape latent, into a point cloud with the VAE decoder.
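The two reverse diffusions in this sampling procedure can be sketched as below. The two `toy_*_eps` functions are hypothetical placeholders for the trained denoisers, the linear schedule and latent sizes are assumed, and decoding to a point cloud is left out because it needs the trained VAE decoder.

```python
import numpy as np

def reverse_diffuse(eps_model, shape, betas, rng, cond=None):
    """Run a full DDPM-style reverse chain starting from standard normal noise."""
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    x = rng.standard_normal(shape)
    for t in range(len(betas) - 1, -1, -1):
        eps = eps_model(x, t, cond)
        x = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:  # no noise is added at the final step
            x = x + np.sqrt(betas[t]) * rng.standard_normal(shape)
    return x

def toy_shape_eps(x, t, cond):
    """Hypothetical placeholder for the shape-latent denoiser (unconditional)."""
    return 0.1 * x

def toy_point_eps(x, t, cond):
    """Hypothetical placeholder for the point-latent denoiser.

    The shape latent `cond` is concatenated to every latent point at every step.
    """
    tiled = np.broadcast_to(cond, (x.shape[0], cond.shape[0]))
    feats = np.concatenate([x, tiled], axis=1)
    return 0.1 * feats[:, : x.shape[1]]

def sample_latents(n_points=32, shape_dim=8, point_dim=4, T=50, seed=0):
    """Sample the shape latent first, then the point latents conditioned on it."""
    rng = np.random.default_rng(seed)
    betas = np.linspace(1e-4, 0.02, T)
    z0 = reverse_diffuse(toy_shape_eps, (shape_dim,), betas, rng)
    h0 = reverse_diffuse(toy_point_eps, (n_points, point_dim), betas, rng, cond=z0)
    return z0, h0
```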

Training objective

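During the training of the VAE, LION is trained by maximizing a modified variational lower bound on the data log-likelihood with respect to the encoder and decoder parameters $\phi$ and $\xi$:

$$
\mathcal{L}_{ELBO}(\phi, \xi) = \mathbb{E}_{p(x),\, q_\phi(z_0 \mid x),\, q_\phi(h_0 \mid x, z_0)}\left[\log p_\xi(x \mid h_0, z_0) - \lambda_z D_{KL}\left(q_\phi(z_0 \mid x) \,\|\, p(z_0)\right) - \lambda_h D_{KL}\left(q_\phi(h_0 \mid x, z_0) \,\|\, p(h_0)\right)\right],
$$

where $z_0$ is the shape latent, $h_0$ denotes the latent points, and $\lambda_z$, $\lambda_h$ weight the KL terms.

The priors $p(z_0)$ and $p(h_0)$ are $\mathcal{N}(0, I)$. During the training of the diffusion models, the models are trained on the embeddings while the VAE is kept fixed.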

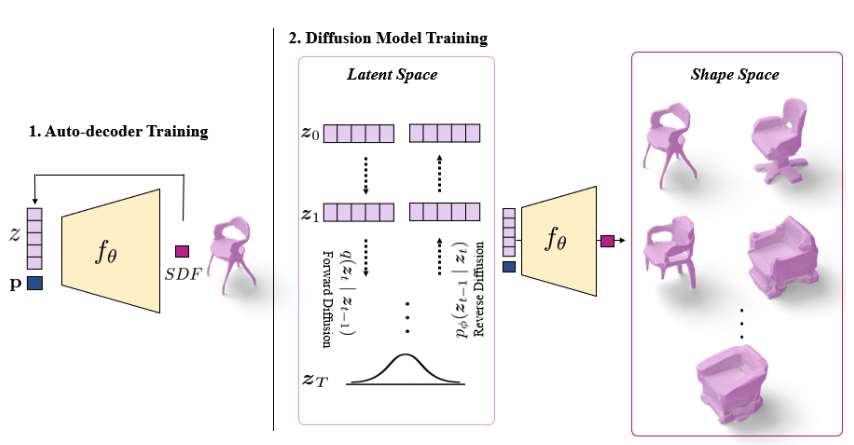

3D-LDM

Many previous works have discussed the limits of different methods of representing 3D shapes. Voxels are compute- and memory-intensive and thus difficult to scale to high resolution; point clouds are lightweight and easy to process, but require a lossy post-processing step to obtain surfaces. Another representation is the signed distance function (SDF), which can represent watertight closed surfaces. Nam et al. proposed using a coded-shape DeepSDF and a diffusion model to generate the latent codes of shapes.

Formulation

Coded Shape SDF: This is a function $f_\theta$ that maps a point $x \in \mathbb{R}^3$ and a latent representation $z$ of a shape to a signed distance (positive outside and negative inside). A set of shapes can be represented with $f_\theta$, given a latent representation $z_i$ for each shape:

$$
\mathrm{SDF}_i(x) \approx f_\theta(x, z_i).
$$
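As a toy example of a coded-shape SDF, consider a family of spheres centred at the origin, where the "latent code" is simply the radius. This analytic function is an illustration only; in the paper the function is a neural network and the code is a learned vector.

```python
import numpy as np

def coded_sphere_sdf(points, z):
    """Toy coded-shape SDF: the code z is the radius of a sphere at the origin.

    Returns positive values outside the sphere, negative inside, zero on the surface.
    """
    return np.linalg.norm(points, axis=-1) - z
```

Changing `z` changes which shape the same function describes, which is the idea behind conditioning one SDF network on a per-shape code.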

Training setup: DeepSDF has shown that $f_\theta$ can be trained efficiently in an encoder-less setup, called an auto-decoder, where each shape is explicitly associated with a latent vector $z_i$. These latent vectors are randomly initialized and learned during training. Therefore, the algorithm consists of two parts:

- Auto-decoder for neural implicit 3D shapes.

- Latent diffusion model for generating latent vector.

For the first part, the paper parameterizes the SDF with $\theta$, and $z_i$ represents the latent vectors. The objective function is the following regularized reconstruction error:

Remarks:

- The latent vectors are unknown before training and are randomly initialized. The training process learns both $\theta$ and $\{z_i\}$ at the same time.

- The training data is sampled with the algorithm discussed in Section 5 of DeepSDF. As implemented in DeepSDF, the data points are sampled within a certain bounding box.

For the second part, the paper uses the latent vectors learned during training in the first part as training data. The diffusion model follows the DDPM framework and parameterizes the model with $\epsilon_\phi$. The paper states that it follows Ho et al. in that it is more stable and efficient to train the network to predict the total noise $\epsilon$. (I checked the DDPM paper and did not find a related description. Personally, I think it is counter-intuitive to predict the total noise and use it as step-wise noise.) In this framework, the training objective for this phase is:

$$
\mathbb{E}_{z_0, \epsilon, t} \left\| \epsilon - \epsilon_\phi(z_t, t) \right\|^2 .
$$

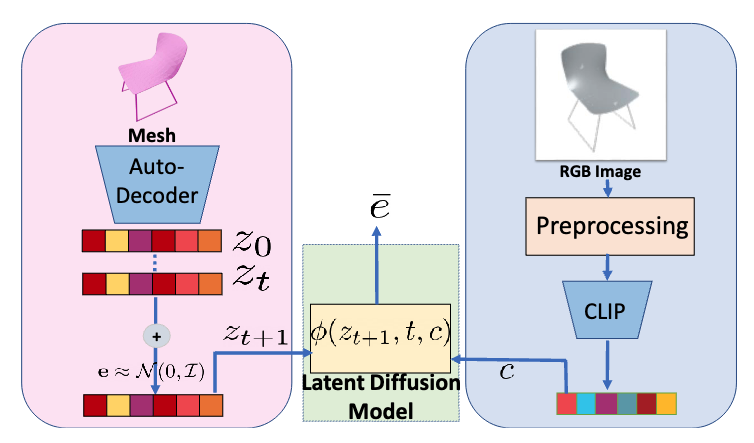

Sampling process

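The unconditional sampling step is exactly the same as in DDPM. The reverse process is approximated by $p_\phi(z_{t-1} \mid z_t)$ and is parameterized as a Gaussian distribution, where the mean is defined as

$$
\mu_\phi(z_t, t) = \frac{1}{\sqrt{\alpha_t}} \left( z_t - \frac{1 - \alpha_t}{\sqrt{1 - \bar\alpha_t}}\, \epsilon_\phi(z_t, t) \right).
$$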

In the case of conditional sampling, a CLIP model is used to generate an embedding of the conditioning information, such as text or an image. The latent vector is then concatenated with the CLIP embedding, and the reverse process mean is modified into

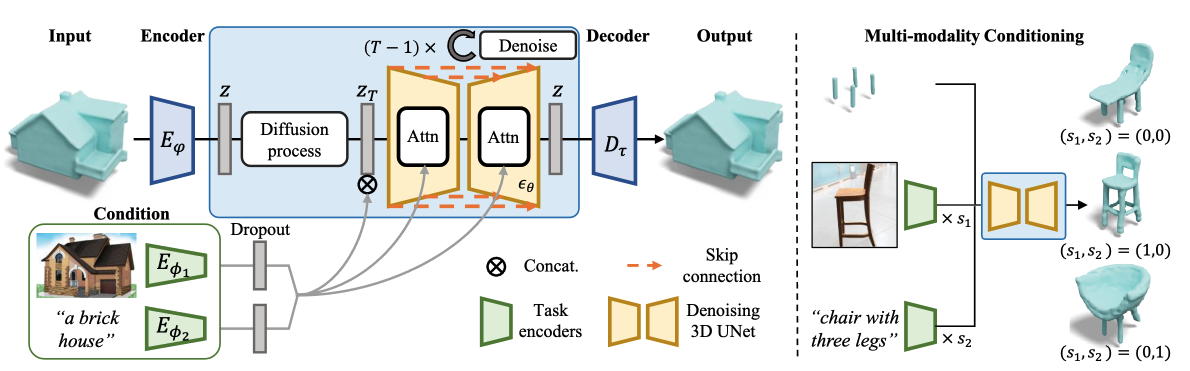

SDFusion

In 3D-LDM, discussed above, the model architecture is an auto-decoder DeepSDF that learns the embedding during training. Cheng et al. proposed an encoder-decoder model and, instead of using DeepSDF, used a Truncated Signed Distance Field (T-SDF) to represent the shape. A T-SDF is a simplified version of an SDF that limits, or "truncates", the range of distances we care about. It models a 3D shape with a volumetric tensor $X \in \mathbb{R}^{D \times D \times D}$. The algorithm in this paper is quite similar to the previous ones and applies a latent diffusion model framework.
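To show what truncation does to a volumetric SDF, here is a sketch that samples the SDF of a sphere on a regular grid and clips it. The grid extent, resolution, and truncation threshold are assumed values for illustration, not the settings used in the paper.

```python
import numpy as np

def sphere_tsdf(resolution=16, radius=0.5, trunc=0.2):
    """Sample a sphere's SDF on a resolution^3 grid over [-1, 1]^3 and truncate it.

    Distances beyond +/- trunc are clipped, so only values near the surface vary.
    """
    axis = np.linspace(-1.0, 1.0, resolution)
    xs, ys, zs = np.meshgrid(axis, axis, axis, indexing="ij")
    sdf = np.sqrt(xs ** 2 + ys ** 2 + zs ** 2) - radius
    return np.clip(sdf, -trunc, trunc)
```

The result is a dense `(D, D, D)` tensor, which is what allows a 3D convolutional encoder to process it like a volumetric image.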

Formulation

The algorithm consists of an auto-encoder that compresses T-SDF data and a diffusion model in latent space.

3D shape compression of SDF: The author leveraged a 3D variant of VQ-VAE and, given an input shape in the form of a T-SDF $X$, we have

$$
z = E_\varphi(X), \qquad X' = D_\tau(\mathrm{VQ}(z)).
$$

In the above formulation, $E_\varphi$ and $D_\tau$ represent the encoder and decoder between 3D space and latent space. VQ is the quantization step, which maps the latent variable $z$ to the nearest element in the codebook $\mathcal{Z}$. $E_\varphi$, $D_\tau$ and $\mathcal{Z}$ are jointly trained.
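The quantization step can be sketched as a nearest-neighbour lookup. This assumes the latent grid has been flattened to a list of vectors and uses squared Euclidean distance; the codebook values in the usage lines are made up.

```python
import numpy as np

def vector_quantize(latents, codebook):
    """Map each latent vector (M, C) to its nearest codebook entry (K, C).

    Returns the quantized vectors (M, C) and the chosen codebook indices (M,).
    """
    diff = latents[:, None, :] - codebook[None, :, :]   # (M, K, C)
    d2 = np.sum(diff ** 2, axis=-1)                     # (M, K) squared distances
    idx = np.argmin(d2, axis=1)
    return codebook[idx], idx

# Usage with a made-up codebook of three 2-D entries
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [4.0, 4.0]])
latents = np.array([[0.1, -0.2], [0.9, 1.2], [3.0, 3.5]])
quantized, indices = vector_quantize(latents, codebook)
```

During training, a VQ-VAE also needs a way to pass gradients through this non-differentiable lookup, which is not shown here.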

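Latent diffusion model for SDF: This part is exactly the same as DDPM. The paper uses a time-conditional 3D UNet $\epsilon_\theta$ to approximate $p(z_{t-1} \mid z_t)$ and adopts the simplified objective function

$$
L_{simple}(\theta) = \mathbb{E}_{z,\, \epsilon \sim \mathcal{N}(0, I),\, t}\left[\left\| \epsilon - \epsilon_\theta(z_t, t) \right\|^2\right].
$$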

References

(Citations removed)